AITRICS, a Korean medical AI company, said Tuesday that two of its papers have been accepted at the International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2025, the world's largest conference on speech, acoustics, and signal processing, which was held in Hyderabad, India, from April 6 to 11.

The accepted papers are titled “Stable Speaker-Adaptive Text-to-Speech Synthesis via Prosody Prompting (Stable-TTS)” and “Face-StyleSpeech: Enhancing Zero-shot Speech Synthesis from Face Images with Improved Face-to-Speech Mapping.” AITRICS presented the work, which covers advanced speech AI techniques, in two poster sessions.

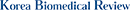

The first paper proposes Stable-TTS, a speaker-adaptive text-to-speech (TTS) framework that naturally reproduces a specific speaker's speaking style and intonation from only a small amount of speech data. It addresses the unstable sound quality of existing speaker-adaptive speech synthesis models and synthesizes speech stably even when the available samples are limited or noisy.

The model maintains this stability by combining three elements: high-quality speech samples used for pre-training, a prosody language model (PLM), and prior-preservation learning. Together, these produce more natural and stable speech, and the approach proved effective even with low-quality or limited speech samples.

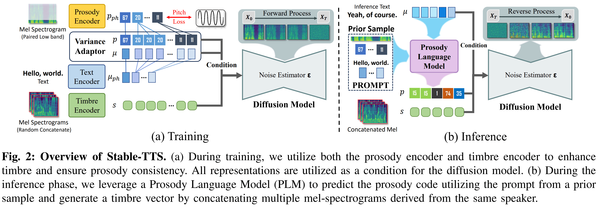

The second paper, Face-StyleSpeech, describes a zero-shot TTS model that generates natural speech from facial images alone. The model infers speaker characteristics from a face image and combines them with prosody codes to produce more realistic, natural speech. In particular, it maps facial information to voice style more precisely than existing face-based speech synthesis models, significantly improving the naturalness of the generated speech.

“This research demonstrates that natural and stable voice generation is possible even with limited data,” said Han Woo-seok, a generative AI engineer at AITRICS. “It is expected to be useful in real-world medical environments where data is often scarce.”

“We believe this research is a stepping stone toward expanding beyond text-based LLMs (Large Language Models) to multi-modal LLMs that integrate voice and images. We will continue working toward reliable, user-friendly medical AI services through ongoing research and development,” Han added.
